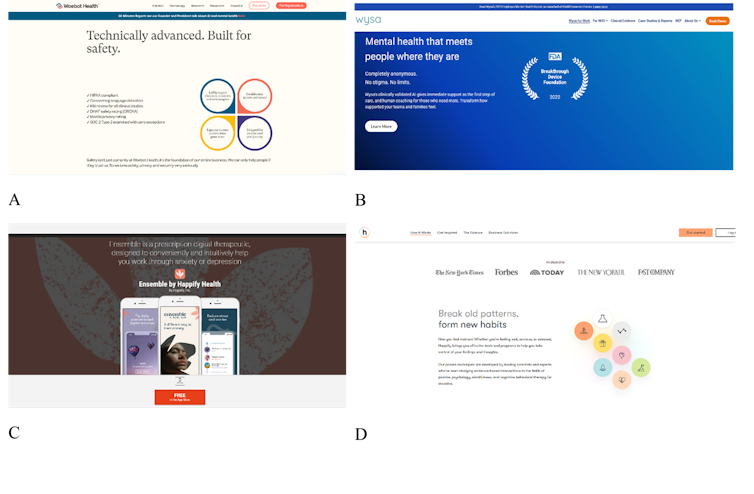

With the current physical and financial barriers to accessing care, people with mental health conditions may turn to artificial intelligence (AI)-powered chatbots for mental health relief or assistance. Although these chatbots are usually not approved as medical devices by the US Food and Drug Administration or Health Canada, their appeal may come from their 24/7 availability, personalized support and marketing of cognitive behavioral therapy.

However, users may overestimate the therapeutic benefits and underestimate the limitations of such technologies, further deteriorating their mental health. This phenomenon can be classified as a therapeutic misconception, where users infer that the chatbot is meant to provide them with real therapeutic care.

With AI chatbots, therapeutic misconceptions can occur in four ways, through two main sources: the company's practices and the design of the AI technology itself.

(Zoha Khawaja)

Company practices: Meet your AI self-help expert

First, the inaccurate marketing of mental health chatbots by companies that label them as “mental health support” tools that incorporate “cognitive behavioral therapy” can be very misleading, since it implies that such chatbots can perform psychotherapy.

Not only do such chatbots lack the skills, training and experience of human therapists, but labeling them as able to provide a “different way to treat” mental illness suggests that they can be used as an alternative way of seeking treatment.

Such marketing strategies can greatly exploit users' trust in the health care system, especially when the chatbots are marketed as being in “close collaboration with physicians.” This kind of marketing can lead users to disclose very personal and private health information without fully understanding who owns their data and who has access to it.

A second type of therapeutic misconception occurs when a user forms a digital therapeutic alliance with a chatbot. With a human therapist, it is beneficial to form a strong therapeutic alliance, where patient and therapist work together, agree on desired goals that can be achieved through tasks, and build a bond based on trust and empathy.

Since a chatbot cannot develop the same therapeutic relationship that users can have with a human therapist, a digital therapeutic alliance can form instead, where the user perceives an alliance with the chatbot even though the chatbot cannot actually form one.

A number of efforts have been made to gain user trust and strengthen the digital therapeutic alliance with chatbots, including giving chatbots human characteristics that simulate and imitate conversations with real therapists, and promoting them as “anonymous” 24/7 companions that can mimic facets of therapy.

Such an alliance may lead users to unwittingly expect the same patient-provider privacy and confidentiality protections that they would have with their own health care providers. Unfortunately, the more deceptive the chatbot is, the more effective the digital therapeutic alliance will be.

Technical design: Is your chatbot trained to help you?

A third therapeutic misconception occurs when users have limited knowledge of potential biases in AI algorithms. Marginalized people are often excluded from the design and development stages of such technologies, which may lead to them receiving biased and inappropriate responses.

When such chatbots are unable to recognize risky behavior or provide culturally and linguistically relevant mental health resources, they could worsen the mental health conditions of vulnerable populations who not only face stigma and discrimination but also lack access to care. A therapeutic misconception occurs when users expect a chatbot to benefit them therapeutically but are instead given harmful advice.

Finally, a therapeutic misconception can arise when mental health chatbots are unable to advocate for and foster relational autonomy, a concept that emphasizes that an individual's autonomy is shaped by their relationships and social context. It is then the physician's responsibility to help the patient recover their autonomy by supporting and motivating them to actively engage in treatment.

AI chatbots present a paradox: they are available 24/7 and promise to improve self-sufficiency in managing one's mental health. Not only can this make help-seeking behaviors highly isolating and individualistic, but it also creates a therapeutic misconception where individuals believe they are autonomously taking a positive step toward improving their mental health.

A false sense of well-being arises when an individual's social and cultural context and the inaccessibility of care are not regarded as contributing factors to their mental health. This false expectation is further reinforced when chatbots are falsely advertised as “relational agents” that “can form a relationship with people…comparable to that achieved by human therapists.”

Measures to avoid the risk of therapeutic misconception

Not all hope is lost with such chatbots, as some proactive steps can be taken to reduce the likelihood of therapeutic misconceptions.

Through honest marketing and regular reminders, users can be kept aware of a chatbot's limited therapeutic capabilities and encouraged to seek more traditional forms of treatment. Indeed, a physician should be made available to those who want to opt out of using such chatbots. Users would also benefit from transparency about how their information is collected, stored and used.

Active patient involvement should also be considered during the design and development stages of such chatbots, along with engagement with multiple experts on ethical guidelines to ensure better protections for users. In this way, such technologies can be governed and regulated.
